Welcome to the project page of

Automatic Lecture Recording

Cameraman module - details

We decided to use Axis cameras and video servers because they provide M-JPEG and MPEG-4 streams in parallel. The MPEG-4 stream is used for broadcasting or recording, while the M-JPEG stream makes it easy to obtain single JPEG images. These JPEG images are used for the image processing.
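
To illustrate why an M-JPEG stream makes single images easy to obtain, here is a minimal sketch that cuts complete JPEG images out of a stream of byte chunks by searching for the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers. The function name `iter_jpeg_frames` and the chunked input are assumptions made for this example; it is not the project's actual code, and a production parser would more likely rely on the framing information that the server sends with each image.

```python
from typing import Iterable, Iterator

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def iter_jpeg_frames(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield each complete JPEG image found in a sequence of byte chunks.

    Hypothetical helper: it assumes every frame is a plain JPEG without
    an embedded thumbnail (which would contain its own EOI marker).
    """
    buffer = bytearray()
    for chunk in chunks:
        buffer.extend(chunk)
        while True:
            start = buffer.find(SOI)
            if start < 0:
                # No frame start yet. Keep the last byte in case the
                # two-byte marker is split across two chunks.
                del buffer[:-1]
                break
            end = buffer.find(EOI, start + 2)
            if end < 0:
                # Frame is still incomplete: drop the bytes before it
                # and wait for more data.
                del buffer[:start]
                break
            yield bytes(buffer[start:end + 2])
            del buffer[:end + 2]


if __name__ == "__main__":
    # Synthetic data standing in for a network stream.
    demo = [b"header\xff", b"\xd8AAA\xff", b"\xd9junk\xff\xd8BB", b"\xff\xd9"]
    for frame in iter_jpeg_frames(demo):
        print(len(frame), "bytes")
```

Each yielded frame is a standalone JPEG file in memory, so it can be handed directly to any JPEG decoder.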

For the lecturer and the audience we use PTZ cameras with a built-in video server, while for the long shot we use an existing Canon PTZ camera in combination with an Axis video server. To enable slide recording, we use a scan converter from Canopus in combination with another Axis video server. As a result, all four video streams (lecturer, audience, long shot, and slides) are available as M-JPEG and MPEG-4 streams in parallel, so the same algorithms can be applied to each of them.

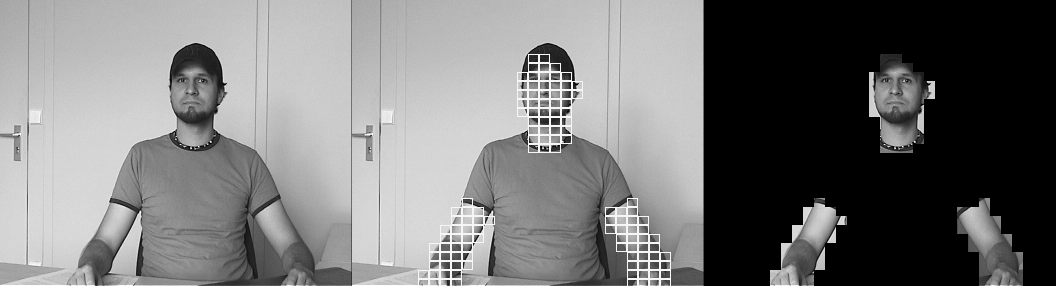

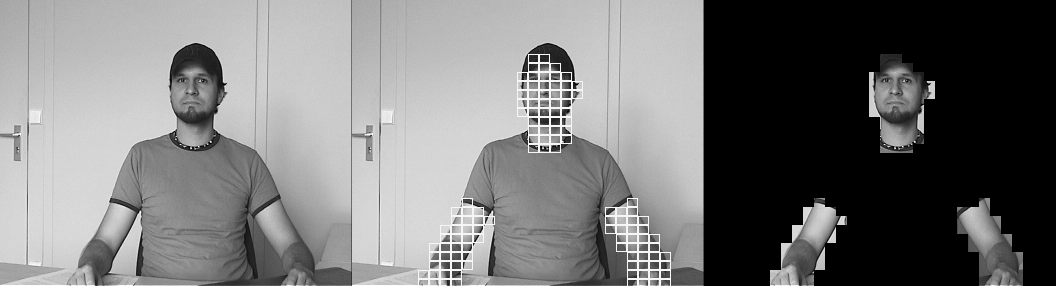

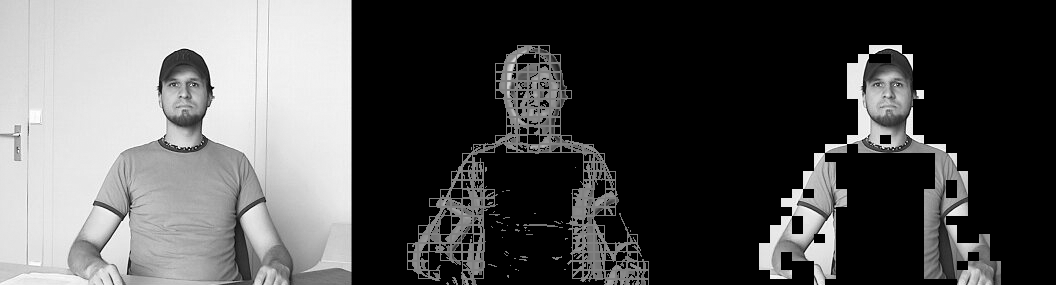

The image processing is fairly basic: calculating histograms, reducing noise, subtracting images, and detecting edges, motion, and skin color. In addition, segmenting an image into rectangles and splitting and joining those rectangles is implemented. All algorithms are adapted to work in real time, so the main delay comes from the streaming buffer of the video server.
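
Three of these operations can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: images are nested lists of pixel values, motion is detected by thresholding the difference between two consecutive frames, and skin is classified with a widely used fixed RGB rule. The thresholds and the names `histogram`, `motion_mask`, `is_skin`, and `skin_mask` are hypothetical and not taken from the project.

```python
from typing import List, Tuple

Gray = List[List[int]]                  # rows of 0..255 intensities
RGB = List[List[Tuple[int, int, int]]]  # rows of (r, g, b) pixels


def histogram(img: Gray, bins: int = 256) -> List[int]:
    """Count how many pixels fall into each of `bins` intensity ranges."""
    counts = [0] * bins
    for row in img:
        for value in row:
            counts[value * bins // 256] += 1
    return counts


def motion_mask(prev: Gray, curr: Gray, threshold: int = 25) -> List[List[bool]]:
    """Subtract two frames; a pixel counts as moving if it changed
    by more than `threshold` (an arbitrary example value)."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def is_skin(r: int, g: int, b: int) -> bool:
    """Simple fixed-threshold RGB skin rule (assumed, not the project's)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_mask(img: RGB) -> List[List[bool]]:
    """Apply the skin rule to every pixel of an RGB image."""
    return [[is_skin(*pixel) for pixel in row] for row in img]


if __name__ == "__main__":
    previous = [[10, 10], [10, 10]]
    current = [[10, 200], [12, 10]]
    print(motion_mask(previous, current))
    print(histogram(current, bins=4))
    print(skin_mask([[(220, 170, 140), (30, 60, 200)]]))
```

Because each function makes a single pass over the pixels, the cost per frame grows linearly with the image size, which is the kind of property that makes such operations usable in real time.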

The following two examples illustrate skin color and motion detection:

Skin Color Detection Example